The Future of Inference Attacks: A New Era in Cybersecurity


Chapter 1: Understanding Inference Attacks

Have you prepared yourself for the emerging threat of inference attacks? This new attack vector is becoming a significant concern for cybersecurity professionals.

Photo by Jefferson Santos on Unsplash

Can you identify the nature of this attack? It targets the application layer, bypasses conventional defenses, and exposes the internal operations of applications. The root cause of such attacks often lies in applications that disclose excessive information in their responses.

If you thought this was about SQL injection attacks, you’re mistaken. Welcome to the realm of inference attacks aimed at AI and Machine Learning applications, which are emerging as the SQL injection vulnerabilities of the future. Just as SQL injection threats revealed significant blind spots for cybersecurity teams nearly two decades ago, inference attacks are set to expose similar weaknesses in AI systems.

Much as SQL injection stemmed from code flaws that had to be fixed at the source, inference attacks exploit weaknesses in the underlying AI models themselves. Are you feeling concerned yet?

Section 1.1: What Are Inference Attacks?

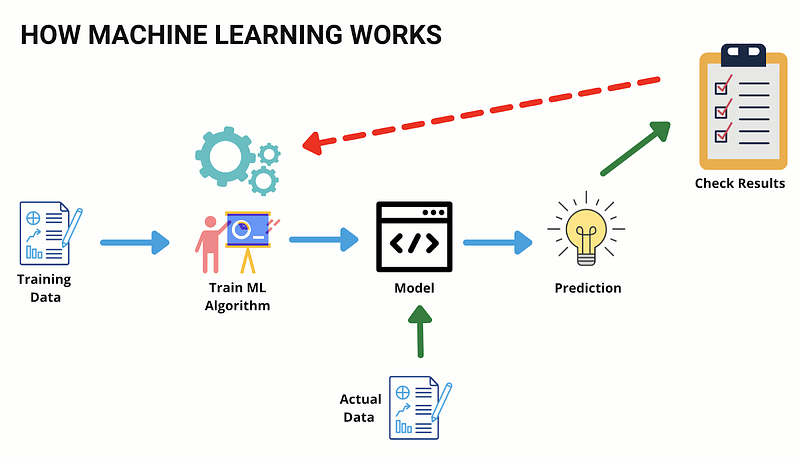

To grasp the concept of inference attacks, we first need to understand the workings of AI and Machine Learning systems. A Machine Learning (ML) system builds its knowledge over time by utilizing training data, allowing it to make informed decisions or predictions. The volume and quality of training data directly correlate with the accuracy of these predictions.

Source: Image by the author

Once deployed, ML systems typically expose public APIs so that users and other applications can access their capabilities. Many of these models operate in sensitive sectors such as healthcare and finance, where they are trained on critical data like cardholder data or Personally Identifiable Information (PII), which makes them prime targets for attackers.

Imagine an attacker aiming to uncover the following:

- What type of data was utilized to train the model?

- How does the ML system arrive at its decisions?

Inference attacks exploit the fact that a model's outputs can leak this information even though it is never disclosed directly. In such an attack, the perpetrator seeks to glean insights into the data used to train the model or into how the model works. Because these models can be trained on highly sensitive data and may represent millions in intellectual property, the fallout for an organization can be catastrophic.

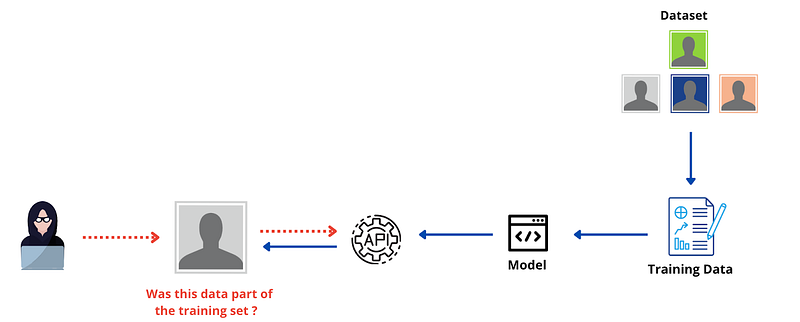

The most prevalent form of inference attack is known as Membership Inference (MI), where an attacker attempts to reconstruct the data used for training the model. ML models typically return responses as confidence scores, with higher scores indicating a closer match to the training data. With API access, an attacker can run records through the ML model and evaluate whether the model has been trained on specific datasets based on the output scores.

Source: Image by the author
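To make the confidence-score idea concrete, here is a minimal Python sketch of the simplest membership inference attack: thresholding the model's top confidence score. It assumes only black-box access to a `predict_proba`-style function that returns class probabilities, as a public API might. `OverfitModel`, `infer_membership`, `membership_advantage`, and the 0.9 threshold are hypothetical illustrations, not any real library's API, and the toy model is deliberately overfitted so that the leak is easy to see. Attack success is scored as the true-positive rate minus the false-positive rate.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Record = Tuple[float, ...]


class OverfitModel:
    """Hypothetical toy classifier that has memorized its training set.

    It is very confident on records it was trained on and noticeably less
    confident elsewhere: the train/test gap membership inference exploits.
    Real models leak far more subtly than this.
    """

    def __init__(self, train_x: Sequence[Record], train_y: Sequence[int], num_classes: int = 2):
        self.memory: Dict[Record, int] = {tuple(x): y for x, y in zip(train_x, train_y)}
        self.num_classes = num_classes

    def predict_proba(self, x: Record) -> List[float]:
        key = tuple(x)
        if key in self.memory:
            label, top = self.memory[key], 0.99  # seen during training
        else:
            # Unseen record: reuse the label of the nearest memorized record,
            # but with a much lower confidence.
            nearest = min(self.memory, key=lambda m: math.dist(m, key))
            label, top = self.memory[nearest], 0.60
        rest = (1.0 - top) / (self.num_classes - 1)
        return [top if c == label else rest for c in range(self.num_classes)]


def infer_membership(predict_proba: Callable[[Record], List[float]],
                     record: Record, threshold: float = 0.9) -> bool:
    """Guess 'member' when the model's top confidence reaches the threshold."""
    return max(predict_proba(record)) >= threshold


def membership_advantage(predict_proba: Callable[[Record], List[float]],
                         members: Sequence[Record], non_members: Sequence[Record],
                         threshold: float = 0.9) -> float:
    """Attack success: true-positive rate minus false-positive rate."""
    tpr = sum(infer_membership(predict_proba, r, threshold) for r in members) / len(members)
    fpr = sum(infer_membership(predict_proba, r, threshold) for r in non_members) / len(non_members)
    return tpr - fpr


if __name__ == "__main__":
    train_x = [(1.0, 2.0), (3.0, 1.0), (0.5, 0.5)]
    model = OverfitModel(train_x, [0, 1, 0])

    print(infer_membership(model.predict_proba, (3.0, 1.0)))  # True: in training set
    print(infer_membership(model.predict_proba, (2.9, 1.1)))  # False: never seen
    print(membership_advantage(model.predict_proba, train_x, [(2.9, 1.1), (0.4, 0.6)]))  # 1.0
```

Against a model that generalizes well, the confidence on seen and unseen records converges, and the measured advantage should fall toward zero.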

If the attacker possesses the requisite skills, they may reverse engineer a model to extract information about its training dataset, which can have dire consequences if sensitive data is involved. Here are a few scenarios illustrating how such attacks can unfold:

- Querying a model with a name or identifier to determine whether an individual is on a hospital's patient list.

- Discovering whether a patient is receiving specific medications.

- Submitting images to a facial recognition model to check whether a particular face was part of its training data.

In more advanced attacks, a malicious actor could even extract sensitive details memorized by the model, such as credit card numbers or Social Security numbers.

Section 1.2: The Rise of ML as a Service

The growing adoption of Machine Learning as a Service (MLaaS) has broadened the attack surface in recent years. Many companies prefer not to build models from scratch and instead use cloud-based services that provide ready-made functionality. While these services handle the heavy lifting, they also concentrate risk: if attackers discover a weakness in a provider's models, they could potentially conduct Membership Inference attacks against many organizations at once.

Regrettably, this scenario is not merely theoretical. Researchers from Cornell University have documented how inference attacks can be executed against these models.

Chapter 2: New Strategies for Mitigating Inference Attacks

Attacks targeting AI and ML models differ significantly from traditional cybersecurity threats and cannot be addressed in the same way. There is no straightforward "patch" for an algorithm, because the problem lies in what the model has learned from its training data. Just as Application Security evolved from a neglected area into a specialized industry, AI and Machine Learning security is likely to follow a similar trajectory.

However, we can learn from past experiences and implement protective measures today:

- ML models should be designed to generalize well beyond their training data: confidence scores for previously seen and unseen records should not diverge significantly, which minimizes the information leaked to attackers.

- The exposed APIs of ML models should enforce rate limits and monitor query patterns to detect persistent malicious querying, much as multiple failed login attempts from a single IP can alert cybersecurity teams.

- Regular security assessments must include penetration testing of AI and ML models, using attack scenarios designed specifically for them, such as membership inference.
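The throttling idea above can be sketched as a per-client sliding-window rate limiter. This is a minimal illustration, assuming each query can be attributed to a client identifier such as an API key; `QueryThrottle`, its default limits, and the `alert_after` rejection count are hypothetical choices, not a standard. In production this would more likely live in an API gateway or a shared store rather than in-process memory.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class QueryThrottle:
    """Hypothetical sliding-window rate limiter keyed by client ID.

    Allows at most `max_queries` per `window_seconds` for each client and
    counts rejected calls, so persistent probing can be flagged for review
    in the same way repeated failed logins are.
    """

    def __init__(self, max_queries: int = 100, window_seconds: float = 60.0,
                 alert_after: int = 10):
        self.max_queries = max_queries
        self.window = window_seconds
        self.alert_after = alert_after
        self.history: Dict[str, Deque[float]] = defaultdict(deque)
        self.rejections: Dict[str, int] = defaultdict(int)

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        """Return True if this query fits within the client's current budget."""
        now = time.monotonic() if now is None else now
        timestamps = self.history[client_id]
        # Forget queries that have slid out of the current window.
        while timestamps and now - timestamps[0] >= self.window:
            timestamps.popleft()
        if len(timestamps) >= self.max_queries:
            self.rejections[client_id] += 1  # over budget: reject and record it
            return False
        timestamps.append(now)
        return True

    def should_alert(self, client_id: str) -> bool:
        """True once a client has been rejected at least `alert_after` times."""
        return self.rejections[client_id] >= self.alert_after


if __name__ == "__main__":
    throttle = QueryThrottle(max_queries=3, window_seconds=10.0, alert_after=2)
    print([throttle.allow("client-a", now=t) for t in (0.0, 1.0, 2.0, 3.0, 4.0)])
    # [True, True, True, False, False]
    print(throttle.should_alert("client-a"))           # True
    print(throttle.allow("client-a", now=10.5))        # True: the t=0 query expired
```

Rate limiting alone will not stop a patient attacker who spreads queries across many keys, so it complements, rather than replaces, good generalization and ML-specific penetration testing.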

For more insights, consider exploring my discounted course on A.I. governance and cybersecurity.

Taimur Ijlal is an award-winning leader in information security, possessing over two decades of global experience in cybersecurity and IT risk management, particularly within the fintech sector. Connect with Taimur on LinkedIn or visit his blog for more insights. He also shares valuable content on his YouTube channel, “Cloud Security Guy,” focusing on Cloud Security, Artificial Intelligence, and cybersecurity career guidance.
