Dynamic Tables in Snowflake: Simplifying Data Transformation

Chapter 1: Introduction to Dynamic Tables

Snowflake recently unveiled Dynamic Tables, a new feature designed to simplify building declarative data transformation pipelines. The announcement follows other significant enhancements aimed at data engineers, including the ability to read files from Java, Scala, or Python functions and support for Snowpipe streaming replication.

Section 1.1: Enhancements in Data Integrations

Snowflake has rolled out new building blocks for data engineering workflows, chief among them dynamic tables. Dynamic tables offer a simpler way to transform data because they remove the need to manage task sequencing, dependencies, and scheduling by hand.

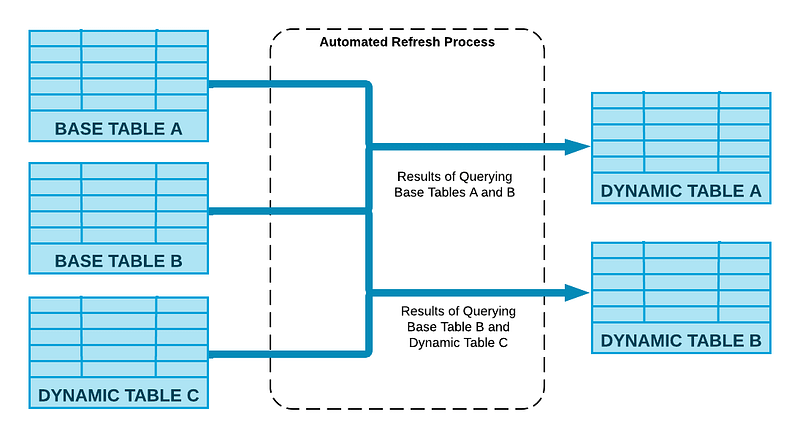

With dynamic tables, you define the desired outcome of a transformation and Snowflake handles the complexities of the pipeline. When creating a dynamic table, the user specifies the query that transforms data from one or more base tables or other dynamic tables. An automated refresh process then keeps the query result up to date on a regular basis, so that the dynamic table reflects changes made to its base tables.
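To make this concrete, here is a minimal Python sketch that renders the kind of statement a user writes to declare a dynamic table. It assumes the `CREATE OR REPLACE DYNAMIC TABLE <name> TARGET_LAG = ... WAREHOUSE = ... AS <query>` form from Snowflake's SQL reference; the helper `build_dynamic_table_ddl` and the names `customer_revenue`, `orders`, `customers`, and `transform_wh` are hypothetical. The example query joins two base tables, and you would run the resulting SQL in Snowflake yourself.

```python
def build_dynamic_table_ddl(name: str, target_lag: str, warehouse: str, query: str) -> str:
    """Render a CREATE DYNAMIC TABLE statement (hypothetical helper).

    target_lag is either a duration such as '5 minutes' or the keyword DOWNSTREAM.
    """
    # DOWNSTREAM is a bare keyword; durations are written as quoted string literals.
    lag = "DOWNSTREAM" if target_lag.upper() == "DOWNSTREAM" else f"'{target_lag}'"
    return (
        f"CREATE OR REPLACE DYNAMIC TABLE {name}\n"
        f"  TARGET_LAG = {lag}\n"
        f"  WAREHOUSE = {warehouse}\n"
        f"AS\n{query.strip()}"
    )


# Hypothetical transformation over two base tables; Snowflake keeps its result current.
QUERY = """
SELECT c.customer_id, c.region, SUM(o.amount) AS total_revenue
FROM orders o
JOIN customers c ON o.customer_id = c.customer_id
GROUP BY c.customer_id, c.region
"""

if __name__ == "__main__":
    print(build_dynamic_table_ddl("customer_revenue", "5 minutes", "transform_wh", QUERY))
```

Note that the statement only declares the result; there is no task, schedule, or merge logic for the user to write.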

Section 1.2: How Dynamic Tables Operate

Unlike streams and tasks, where you write the change-processing logic yourself, dynamic tables rely on an automated process that tracks changes to the base tables and merges them into the dynamic table. Refreshes consume compute from the warehouse assigned to the dynamic table.
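The change-tracking idea can be illustrated with a toy model. This is not Snowflake's internal implementation; it only assumes that a refresh sees the rows inserted into and deleted from a base table since the previous refresh, and applies them to an already materialized `SUM ... GROUP BY` result instead of recomputing it from scratch. All names (`ChangeSet`, `full_refresh`, `incremental_refresh`, the `region`/`amount` rows) are hypothetical.

```python
from dataclasses import dataclass, field

Row = tuple[str, int]                      # (region, amount): a hypothetical base-table row
Materialized = dict[str, tuple[int, int]]  # region -> (row_count, SUM(amount))


@dataclass
class ChangeSet:
    """Rows inserted into and deleted from the base table since the last refresh."""
    inserted: list[Row] = field(default_factory=list)
    deleted: list[Row] = field(default_factory=list)


def full_refresh(base_rows: list[Row]) -> Materialized:
    """Recompute the aggregate from every row of the base table."""
    result: Materialized = {}
    for region, amount in base_rows:
        count, total = result.get(region, (0, 0))
        result[region] = (count + 1, total + amount)
    return result


def incremental_refresh(current: Materialized, changes: ChangeSet) -> Materialized:
    """Merge only the changed rows into the previously materialized result."""
    result = dict(current)
    for sign, rows in ((1, changes.inserted), (-1, changes.deleted)):
        for region, amount in rows:
            count, total = result.get(region, (0, 0))
            result[region] = (count + sign, total + sign * amount)
    # Tracking row counts (not just sums) lets a group disappear exactly
    # when its last row has been deleted.
    return {region: agg for region, agg in result.items() if agg[0] > 0}


if __name__ == "__main__":
    base = [("east", 10), ("west", 5), ("east", 7)]
    materialized = full_refresh(base)

    changes = ChangeSet(inserted=[("west", 3), ("north", 4)], deleted=[("east", 10)])
    base.remove(("east", 10))
    base.extend(changes.inserted)

    # The incremental result matches a full recomputation over the new base table.
    assert incremental_refresh(materialized, changes) == full_refresh(base)
    print(incremental_refresh(materialized, changes))
```

The point of the comparison at the end is the invariant any incremental refresh must keep: applying only the changes yields the same result as re-running the whole query.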

When creating a dynamic table, users specify a target freshness for its data as a target lag (the `TARGET_LAG` parameter), i.e., how far the dynamic table's contents may trail behind updates to its base tables. For details, refer to the official Snowflake documentation on dynamic tables.
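As a rough illustration of what a target lag promises, the sketch below checks whether a table's contents are within a given freshness bound, meaning the base-table state it reflects is no older than the target lag allows. The helpers `parse_target_lag` and `within_target_lag` and the simple `'<number> <unit>'` parser are assumptions for illustration only; Snowflake schedules refreshes and measures lag itself.

```python
from datetime import datetime, timedelta, timezone

# Units accepted in a duration such as '5 minutes' (singular or plural form).
_UNITS = {"second": "seconds", "minute": "minutes", "hour": "hours", "day": "days"}


def parse_target_lag(text: str) -> timedelta:
    """Parse a duration like '5 minutes' or '1 hour' into a timedelta (hypothetical helper)."""
    number, unit = text.split()
    unit = unit.lower().rstrip("s")  # 'minutes' -> 'minute'
    if unit not in _UNITS:
        raise ValueError(f"unsupported unit: {unit!r}")
    return timedelta(**{_UNITS[unit]: int(number)})


def within_target_lag(data_timestamp: datetime, now: datetime, target_lag: str) -> bool:
    """True if the table reflects base data that is no older than the target lag."""
    return now - data_timestamp <= parse_target_lag(target_lag)


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    refreshed_as_of = now - timedelta(minutes=3)
    print(within_target_lag(refreshed_as_of, now, "5 minutes"))  # True
    print(within_target_lag(refreshed_as_of, now, "2 minutes"))  # False
```

A shorter target lag tightens this bound, which generally means more frequent refreshes and therefore more warehouse compute.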

Section 1.3: Use Cases for Dynamic Tables

Potential applications for dynamic tables include:

- Avoiding custom code for managing data dependencies and refresh cycles.

- Reducing the complexity of pipelines built with streams and tasks.

- Cases where fine-grained control over the refresh schedule is not needed, so specifying a target freshness is enough.

- Materializing results from queries that involve multiple base tables.
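Dependency management is the part of a pipeline that dynamic tables take over from hand-built task graphs. The sketch below uses Python's standard `graphlib` module to show the ordering problem in miniature: given the tables each dynamic table reads from, derive an order in which refreshes can run so that every table refreshes after its inputs. The pipeline and table names are hypothetical, and this only models the idea; Snowflake derives the graph from the dynamic tables' queries and schedules refreshes on its own.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each dynamic table maps to the tables its query reads from.
# raw_orders and raw_customers are base tables; the others are dynamic tables.
PIPELINE = {
    "clean_orders": {"raw_orders"},
    "clean_customers": {"raw_customers"},
    "customer_revenue": {"clean_orders", "clean_customers"},
    "regional_summary": {"customer_revenue"},
}


def refresh_order(pipeline: dict[str, set[str]]) -> list[str]:
    """Return the dynamic tables in an order where every input comes first."""
    order = TopologicalSorter(pipeline).static_order()
    # Base tables appear only as predecessors; they are not refreshed.
    return [table for table in order if table in pipeline]


if __name__ == "__main__":
    print(refresh_order(PIPELINE))
```

With streams and tasks, an ordering like this has to be encoded by hand in task dependencies; a cyclic definition would make `static_order()` raise `graphlib.CycleError`.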

Dynamic tables are a valuable new capability that can greatly simplify data preparation and transformation in ELT processes on your Snowflake Data Warehouse, and a solid alternative to conventional streams and tasks.

Chapter 2: Further Learning

The first video, "Snowflake-Dynamic Tables - Make your Pipelines Easy!", gives an overview of how this feature can improve data engineering workflows, focusing on ease of use and efficiency.

The second video, "Feature Flurry: Building Streaming Pipelines with Dynamic Tables," delves into building and optimizing streaming data pipelines using dynamic tables, showcasing practical applications and benefits.