# Unlocking Advanced Capabilities of GPT for Business Applications


Chapter 1: Exploring the Power of GPT

If you're reading this, chances are you've already dipped your toes into ChatGPT. Perhaps you've used it to paraphrase emails or generate catchy captions for your social media posts. However, the capabilities of large language models (LLMs) like GPT extend well beyond these basic tasks.

In practice, GPT can power complex workflows within your productivity tools or business products. By crafting prompts for multi-step processes and combining the model with your own code and external data, you can get far more out of it.

In this piece, I outline several advanced applications of GPT and show how to apply them to productivity and product development, covering prompt engineering, templating, chaining, embeddings, and planning.

Section 1.1: The Art of Prompt Engineering

The quality of responses from a language model can vary significantly depending on how the query is phrased. The discipline of crafting prompts that yield specific and high-quality outputs is known as prompt engineering. Researchers have investigated numerous methods to enhance the performance of large language models.

Some effective techniques include:

- Contextual and Task Specification: Clearly define the context and the type of output you seek from the model (often termed instruct prompting).

- Few-shot Learning: By providing the model with a few examples of prompt-response pairs, you can substantially elevate response quality. Ensure these examples are diverse and reflective of the actual query.

- Chain-of-Thought (CoT) Prompting: This approach is beneficial for tasks requiring complex reasoning. By prompting the model to articulate a sequence of logical steps, you can guide it to the desired outcome. A useful phrase to incorporate is “Let’s think step by step.”

- Generated Knowledge/Internal Retrieval: Encourage the model to produce relevant knowledge before tackling the main query, which can enhance its reasoning capabilities.

Mastering these foundational techniques is crucial before progressing to more complex skills that enable the LLM to manage intricate tasks.
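The first three techniques can be combined in a single prompt. The sketch below is a minimal illustration, not a prescribed format: the `build_prompt` helper, the Q/A layout and the example word problems are assumptions made up for this example, and the resulting string would still need to be sent to a model through whichever API you use.

```python
# Minimal sketch: instruct prompting + few-shot examples + a chain-of-thought
# cue, assembled into one prompt string. `build_prompt` and the Q/A layout
# are illustrative assumptions, not a required format.

def build_prompt(task: str, examples: list[tuple[str, str]], query: str,
                 chain_of_thought: bool = True) -> str:
    """Return a prompt with the task specification, worked examples, then the query."""
    parts = [f"Task: {task}", ""]  # contextual and task specification
    for question, answer in examples:  # few-shot examples
        parts += [f"Q: {question}", f"A: {answer}", ""]
    parts.append(f"Q: {query}")
    # Chain-of-thought cue: nudge the model to reason before answering.
    parts.append("A: Let's think step by step." if chain_of_thought else "A:")
    return "\n".join(parts)


examples = [
    ("A shirt costs $20 and is 25% off. What is the sale price?",
     "25% of $20 is $5, so the sale price is $20 - $5 = $15."),
    ("I buy 3 pens at $2 each and pay with $10. What is my change?",
     "The pens cost 3 * $2 = $6, so the change is $10 - $6 = $4."),
]
prompt = build_prompt("Solve the word problem and show your reasoning.",
                      examples,
                      "A book costs $12 and I have a $5 coupon. What do I pay?")
print(prompt)
```

Keeping the examples in the same Q/A shape as the final query helps the model recognize what kind of answer is expected.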

Section 1.2: Utilizing Prompt Templating

If you have a programming background, you may be familiar with the concept of defining functions for repetitive tasks. This same principle applies to LLMs. Each prompt can be seen as a function designed to achieve a specific outcome, where certain elements remain consistent while others may vary based on context.

For instance, if you want an LLM to provide an answer to a question based on a specific context using bullet points, a template could look like this:

Answer the question based on the provided context. Please respond using bullet points.

--CONTEXT START--

{context}

--CONTEXT END--

Question: {query}

You can dynamically substitute {context} and {query} into the template before sending it to the LLM, using string formatting or libraries like LangChain or Semantic Kernel.
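As a minimal sketch of the string-formatting route, assuming plain Python and a made-up sample context and question, the template can be stored once and filled with `str.format`:

```python
# Sketch of the bullet-point QA template, filled in with Python's built-in
# str.format. The sample context and question below are made up.
QA_TEMPLATE = """Answer the question based on the provided context. Please respond using bullet points.

--CONTEXT START--

{context}

--CONTEXT END--

Question: {query}"""


def render_qa_prompt(context: str, query: str) -> str:
    """Substitute the variable parts into the fixed template."""
    return QA_TEMPLATE.format(context=context, query=query)


prompt = render_qa_prompt(
    context="Our store opens at 9am on weekdays and 10am on weekends.",
    query="When does the store open on Saturday?",
)
print(prompt)
```

Calling `str.format` on a stored template, rather than writing an f-string at each call site, keeps the fixed wording in one place. Braces that happen to appear inside the substituted `context` are not re-interpreted, because only the template string is parsed. LangChain's `PromptTemplate` wraps the same idea.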

Chapter 2: Prompt Chaining for Complex Problem Solving

In programming, functions can be interconnected, allowing the output of one to serve as the input for another. This concept is equally applicable to LLM prompts, where each call can be treated like a function with its own input and output.

For example, with a library like LangChain you can chain two prompts: the first writes a joke, and the second takes that joke as its input and explains why it is funny. Chaining lets you break a complex task into smaller prompts, each with a focused job, and pass the result of one step to the next.
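The sketch below shows the chaining pattern itself in plain Python rather than through LangChain's API. `fake_llm` is a hypothetical stand-in that returns canned text so the example runs offline; in a real application you would pass in a function that calls your model. Each step is an ordinary function, and the chain is simply their composition.

```python
from typing import Callable

# An "LLM" here is any function from prompt text to completion text.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, returning canned text."""
    if prompt.startswith("Write a short joke"):
        return "Why did the developer go broke? He used up all his cache."
    return "It is a pun: 'cache' sounds like 'cash'."


def joke_step(llm: LLM, topic: str) -> str:
    # Step 1: produce a joke; its output becomes the input of step 2.
    return llm(f"Write a short joke about {topic}.")


def explain_step(llm: LLM, joke: str) -> str:
    # Step 2: the prompt embeds the previous step's output.
    return llm(f"Explain why this joke is funny:\n{joke}")


def run_chain(llm: LLM, topic: str) -> tuple[str, str]:
    """Compose the two prompts like functions: explain(joke(topic))."""
    joke = joke_step(llm, topic)
    explanation = explain_step(llm, joke)
    return joke, explanation


joke, explanation = run_chain(fake_llm, "programmers")
print(joke)
print(explanation)
```

Passing the model in as a parameter makes it easy to swap the stub for a real client, or to wrap it with logging, without touching the chain.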

Section 2.1: Implementing Embeddings for Enhanced Processing

To let an LLM work with large amounts of your own text, you can convert that text into embeddings: vectors in a high-dimensional space in which texts with similar meanings lie close together. Computing embeddings allows extensive preprocessing before the LLM is called, such as finding the passages relevant to a query so that only those passages are sent to the model. This works around context window limits and gives the model access to a memory or database.
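One common preprocessing step, assumed here rather than described in the text, is splitting long documents into overlapping chunks before embedding them, so that each chunk can later fit into the context window. This sketch counts words as a rough proxy for tokens, which is an assumption; production pipelines usually count tokens with the model's own tokenizer. The chunk size and overlap values are illustrative.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of up to `chunk_size` words, where consecutive
    chunks share `overlap` words so context is not cut off at a boundary.
    Word counts stand in for token counts (an assumption)."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last chunk reached the end
            break
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
print(chunk_words(doc, chunk_size=4, overlap=1))
# → ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Each chunk would then be embedded and stored individually.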

Several pre-trained word embedding models, such as Word2Vec and GloVe, are available. OpenAI also offers an embeddings endpoint in its API, which supports applications such as text comparison (semantic similarity) and clustering.

By converting your documents into embeddings, you can create a vector index or database that helps retrieve the most relevant information based on similarity to your prompt. This effectively gives the LLM a memory that extends beyond the current interaction.
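The retrieval idea can be sketched with an in-memory index. To keep the example self-contained, `toy_embed` is a hypothetical stand-in that builds bag-of-words count vectors; a real system would call an embedding model (such as OpenAI's embeddings endpoint) and usually store the dense vectors in a dedicated vector database. The ranking by cosine similarity and the step of pasting the best match into the prompt are the parts that carry over.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count
    vector. Real embeddings are dense float vectors from a trained model."""
    return Counter(text.lower().replace("?", "").replace(".", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class VectorIndex:
    """Minimal in-memory vector index storing (embedding, text) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((toy_embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = toy_embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


index = VectorIndex()
for doc in ["Refunds are processed within 5 business days.",
            "Our office is closed on public holidays.",
            "Shipping is free for orders over $50."]:
    index.add(doc)

question = "How long do refunds take?"
context = "\n".join(index.search(question, k=1))
prompt = f"Answer using this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```

Only the retrieved passage reaches the model, which is what lets the stored knowledge exceed the context window.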

Section 2.2: Action Planning with GPT

Another sophisticated application of GPT is in action planning. By defining a goal, you can instruct GPT to outline the steps necessary to achieve that goal using a range of available actions (which could include APIs or functions).

Because the model decides the order of steps at run time, this iterative planning can make a program much more flexible: you don't need to hard-code extensive business logic. Instead, you implement the essential lower-level functions and let GPT combine them to meet the user's request.
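A minimal sketch of the prompt side of planning, assuming the model is asked to answer with one JSON object per step: `build_planning_prompt` lists the goal, the available actions and the observations so far, and `parse_next_action` checks that the reply names an action that was actually offered. Both helper names and the JSON reply format are assumptions for illustration, and the model's reply is hard-coded rather than fetched from an API.

```python
import json


def build_planning_prompt(goal: str, actions: dict[str, str], history: list[str]) -> str:
    """Describe the goal, the available actions and what has happened so far,
    then ask the model for exactly one next action as JSON."""
    lines = [f"Goal: {goal}", "", "Available actions:"]
    lines += [f"- {name}: {desc}" for name, desc in actions.items()]
    lines += ["", "Observations so far:"]
    lines += [f"- {h}" for h in history] or ["- (none)"]
    lines += ["",
              'Reply with JSON only: {"action": <name>, "input": <string>}, '
              'or {"action": "FINISH", "input": <final answer>}.']
    return "\n".join(lines)


def parse_next_action(reply: str, actions: dict[str, str]) -> tuple[str, str]:
    """Parse the model's JSON reply and reject actions that were not offered."""
    data = json.loads(reply)
    name, arg = data["action"], str(data["input"])
    if name != "FINISH" and name not in actions:
        raise ValueError(f"Model chose an unknown action: {name}")
    return name, arg


actions = {
    "IP_To_Location": "input: an IP address; returns the city it maps to",
    "Location_To_Weather": "input: a city; returns the current weather",
    "Send_Email": "input: the email body; sends it to the user",
}
prompt = build_planning_prompt("Email the user today's weather", actions, [])
print(prompt)
# A reply like this would come back from the model (hard-coded here).
print(parse_next_action('{"action": "IP_To_Location", "input": "203.0.113.7"}', actions))
```

Validating the reply against the offered actions matters because the model may occasionally name an action that does not exist.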

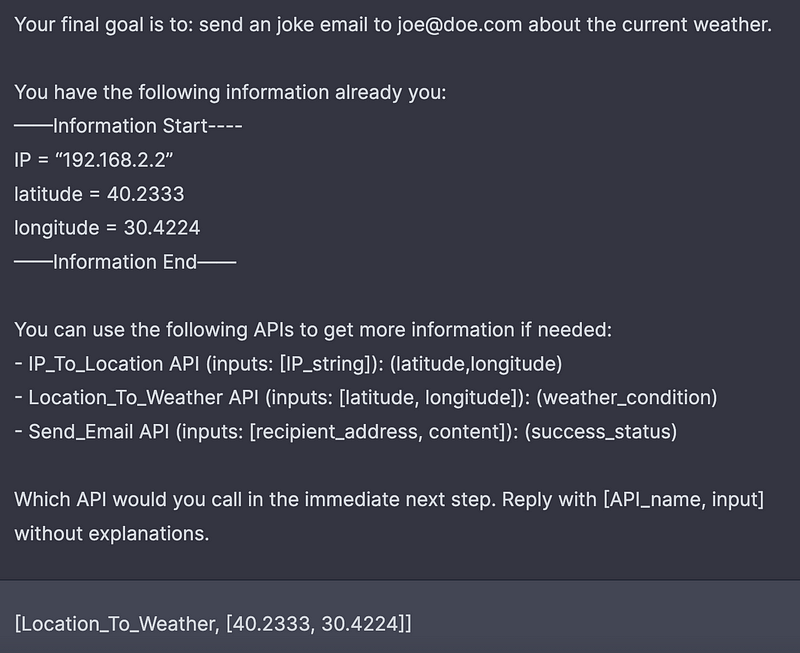

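For example, consider three APIs: IP_To_Location, Location_To_Weather, and Send_Email. Given the goal of emailing a user the weather at their current location, GPT can decide at each step which API to call next based on the information it has so far: first IP_To_Location to find the user's location, then Location_To_Weather for that location, and finally Send_Email with the result. In this way it reaches the final goal step by step.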
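The loop that drives this example can be sketched as follows. The three API functions are hypothetical stubs returning fixed values, and `scripted_planner` stands in for GPT by hard-coding the choice it would be expected to make at each step; in a real system that function would send the goal, the action list and the history to the model. The `max_steps` cap is a safeguard against a plan that never finishes.

```python
from typing import Callable

History = list[tuple[str, str]]  # (action name, result) pairs


# Hypothetical stubs; real versions would call geolocation, weather and mail services.
def ip_to_location(ip: str) -> str:
    return "Berlin"


def location_to_weather(city: str) -> str:
    return f"Sunny, 22 C in {city}"


def send_email(body: str) -> str:
    return f"Email sent: {body}"


TOOLS: dict[str, Callable[[str], str]] = {
    "IP_To_Location": ip_to_location,
    "Location_To_Weather": location_to_weather,
    "Send_Email": send_email,
}


def scripted_planner(goal: str, history: History) -> tuple[str, str]:
    """Stand-in for GPT: picks the next action from what is known so far."""
    if not history:
        return "IP_To_Location", "203.0.113.7"
    last_action, last_result = history[-1]
    if last_action == "IP_To_Location":
        return "Location_To_Weather", last_result
    if last_action == "Location_To_Weather":
        return "Send_Email", last_result
    return "FINISH", last_result


def run_agent(goal: str, planner, tools: dict[str, Callable[[str], str]],
              max_steps: int = 5) -> History:
    """Ask the planner for one action at a time, execute it, record the result."""
    history: History = []
    for _ in range(max_steps):
        action, arg = planner(goal, history)
        if action == "FINISH":
            return history
        result = tools[action](arg)
        history.append((action, result))
    raise RuntimeError("Plan did not finish within max_steps")


steps = run_agent("Email the user the weather at their location", scripted_planner, TOOLS)
for action, result in steps:
    print(action, "->", result)
```

Only the planner needs to change when swapping in a real model; the tools and the loop stay the same.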

Final Thoughts

The capabilities of LLMs like GPT are truly remarkable and are advancing at an unprecedented pace. OpenAI's recent announcement about plugin features for GPT, which enable internet access, code execution, and integration with third-party APIs, further underscores this potential. The techniques outlined in this article, combined with these new plugin functionalities, could revolutionize application development and user interaction, paving the way for innovative business models.

For those interested in deepening their understanding of GPT/LLM applications and addressing challenges such as memory, searching, and caching, consider exploring additional resources like the Cookbook for solving common problems in building GPT/LLM applications.